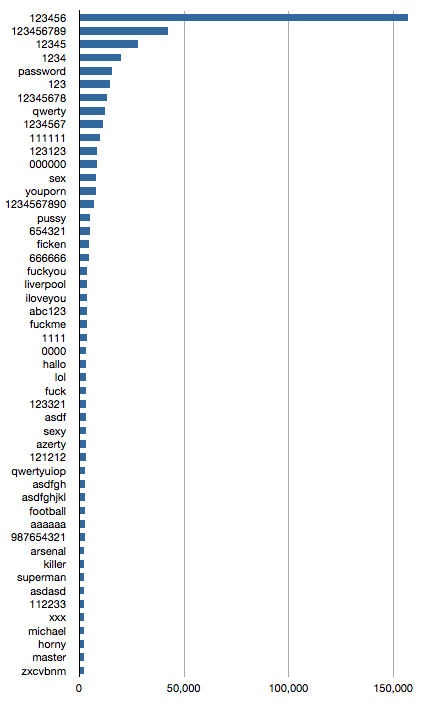

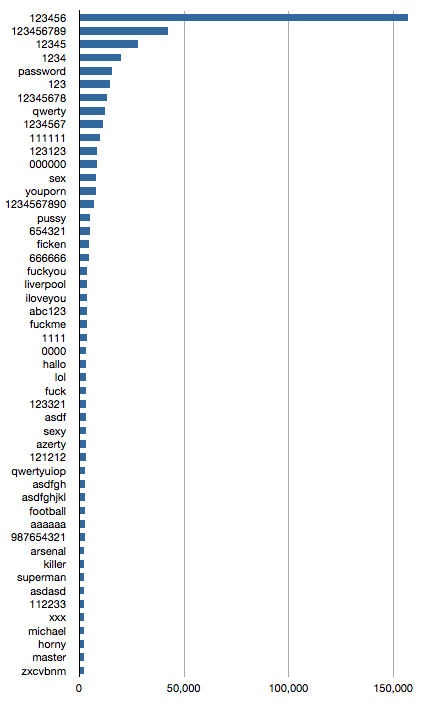

YouPorn Passwords

Feb 23 2012

Customer details of registered users of the website YouPorn were recently revealed after a third-party chat service "failed to take the appropriate precautions in securing its user data". According to various reports, an estimated 1 million user names, email addresses and plain-text passwords were exposed, much to the embarrassment of those individuals, I’m sure. My tests extracted closer to 2 million unique usernames from the files, so I’m not sure what accounts for the discrepancy.

Since the data is now effectively in the public domain, I did a quick analysis of the passwords. While some may be attributed to the nature of the content of the aforementioned site, others are the usual suspects, with “123456” taking top honors by a large margin.

Here are the top 50.

Increased Bandwidth = Slower UDP scans?

Feb 07 2012

I recently finished up a marathon debugging and research session attempting to solve an issue in a network scanning application where, if the code was run on Windows Server 2003, scanning a large number of UDP ports completed significantly quicker than when the exact same software was run on Windows Server 2008 R2. The tests even went so far as to be run on the exact same hardware, re-imaging the box between the 2 configurations. And yet the problem persisted.

I had seen KB articles and patches available for WIN2K8R2 that allegedly fixed some latency issues around UDP processing, but we were at SP1 and these fixes were already in place. Regardless, the code responsible for sending and receiving the UDP packets on the network was written to use WinPcap, the low-level packet capture driver for Windows, and thus should not have suffered from any (comparatively) higher-level changes made in the TCP/IP stack between operating systems.

All manner of extensive tests were run to try to nail down why the scans took so much longer on 2008 R2.

To cut a very long story short, the issue was with the network card driver.

Now usually when I investigate networking problems like this, the very first thing I do is check the NIC settings to make sure they are all correct. Usually the NIC should be in "auto-configure" or "auto-detect" mode and operating in full-duplex. Indeed, early on I had briefly looked at both systems' NIC properties and both reported running at 1 Gbps full-duplex. Nothing to see here. Move on. About the only difference in this area was that the 2K3 box was using older Intel PROSet drivers. Surely nothing major had changed or been fixed since those were released though, right?

Fast forward a number of agonizing days of further debugging and, instead of trying to find out what was wrong in the application code, I attempted to see whether the problem could be reproduced and isolated with something that did not involve our code at all. Enter the wonderful tool Iperf.

Using Iperf in UDP mode it quickly became apparent something was wrong. Throughput tests from the 2K3 system were half that of the WIN2K8R2 box when tested against the same remote Iperf server. Not just roughly half, but almost exactly half! That's too much of a coincidence.

So what could account for half the throughput? Well, if the NIC was not really operating in full-duplex mode and instead was running in half-duplex, that would be half the bandwidth.

I switched both NICs into 100 Mbps full-duplex mode (curiously the configuration properties of the WIN2K3 NIC did not offer a manual selection for 1 Gbps full-duplex despite the summary claiming it was already running that way) and re-ran the Iperf tests. Amazingly both systems now produced exactly the same throughput!

A slew of application tests were launched again with the new manual NIC settings and the problem was gone. Both systems finished their large UDP scans in consistently similar times.

The NIC properties were lying. It wasn't running full-duplex on the WIN2K3 system.

Problem solved.

One last mystery though. How can increased bandwidth (on the WIN2K8R2 box running 1 Gbps full-duplex versus WIN2K3 running 1 Gbps half-duplex) result in a large UDP scan that runs slower?

To explain this I have to explain how UDP scanning works against typical modern targets.

Most modern operating systems restrict the rate at which they inform the sender that a UDP port is closed (see RFC 1812 and others). They will, for example, send a "closed" ICMP Destination Port Unreachable message only once every second, regardless of how many probes we've sent that target. For any port we've probed without getting an explicit "closed" (or "open", for that matter) response, we have to wait for a timeout period in case the reply is slow to arrive.
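To make the three possible outcomes of a single probe concrete, here is a minimal Python sketch. It is not the scanner discussed in this post (that one talks to WinPcap directly); it assumes an ordinary connected UDP socket, on which most operating systems report an ICMP Port Unreachable as a connection error. The function name `probe_udp_port` and the state labels are made up for illustration.

```python
import socket


def probe_udp_port(host, port, timeout=1.0):
    """Send one empty UDP datagram and classify the port by what comes back.

    Hypothetical helper for illustration only. Returns "open", "closed",
    or "no-response" (lost, filtered, or rate-limited: we cannot tell).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        # Connecting a UDP socket lets the OS hand ICMP errors back to us.
        sock.connect((host, port))
        try:
            sock.send(b"")
            sock.recv(1024)
            return "open"  # something answered on that port
        except socket.timeout:
            return "no-response"  # must be retried in a later pass
        except (ConnectionRefusedError, ConnectionResetError):
            # ICMP Destination Port Unreachable surfaced by the OS
            # (refused on Linux, reset on Windows).
            return "closed"


if __name__ == "__main__":
    print(probe_udp_port("127.0.0.1", 9, timeout=0.5))
```

The "no-response" case is the expensive one: it costs a full timeout and a retry, which is exactly what the rate limiting described above makes so common.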

So imagine we send 100 UDP probes and it takes us 10 seconds to do that. This would represent the 2K3 system running in half-duplex. During that time we may receive 10 "closed" replies (at a rate of 1 every second), assuming that the vast majority of scanned ports are actually closed, which they are in practice. So for 10 of our sent probes we receive the "closed" response pretty much immediately and can log the associated port’s state and be done with it. For the other 90 ports we have to wait for our timeout period to expire and retry in one of the following passes, because we don't know if the packet was lost or filtered or whatever.

Now imagine the same scenario where sending our 100 probes instead takes 2 seconds. This would represent the 2K8R2 system running in full-duplex (OK, I know this isn’t double the throughput but I’m just trying to make a point). During that time we may only receive 2 "closed" responses, leaving 98 ports to wait for a timeout.

Scale this up to 65536 ports against dozens of targets and you can see how this mounts up.
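The arithmetic above can be played out over several passes with a toy model. This is a rough sketch under simplifying assumptions that are mine, not measurements: every port is closed, the target answers at most `icmp_rate` probes per second and silently drops the rest, each pass is followed by a fixed `timeout`, and retries continue until every port has been answered. The function names and parameters are hypothetical.

```python
def replies_in_pass(remaining, send_rate, icmp_rate=1.0):
    """How many "closed" replies arrive while one pass of probes is sent."""
    send_duration = remaining / send_rate
    # Assume the first probe of a pass always gets an answer, so the
    # model makes progress even when a pass is shorter than one second.
    return min(remaining, max(1, int(send_duration * icmp_rate)))


def simulated_scan_time(ports, send_rate, icmp_rate=1.0, timeout=1.0):
    """Total seconds until every (closed) port has been answered."""
    remaining = ports
    elapsed = 0.0
    while remaining > 0:
        elapsed += remaining / send_rate  # time spent sending this pass
        remaining -= replies_in_pass(remaining, send_rate, icmp_rate)
        if remaining > 0:
            elapsed += timeout  # wait before retrying the silent ports
    return elapsed


if __name__ == "__main__":
    slow = simulated_scan_time(100, send_rate=10)  # 100 probes in 10 s
    fast = simulated_scan_time(100, send_rate=50)  # 100 probes in 2 s
    print(f"slow sender: {slow:.1f} s, fast sender: {fast:.1f} s")
```

In this model the slower sender finishes first, because each of its passes overlaps with more of the target's one-per-second replies and so it needs fewer timeout-and-retry rounds. A real scanner caps the number of retries, so treat the numbers as illustrative only.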

With the fixed NIC settings this is expected and correct behavior. The fact that it was faster with the "broken" driver was just a quirk of that configuration. Had the NIC been working correctly we would not be here today discussing this problem since all scans would have taken the same (longer) time.
